HQ Team

September 27, 2025: Chronic disregard and systematic underinvestment have left women largely out of healthcare through the ages. What is more worrying is that the same disregard seems to have crept into the AI models being promoted as the next revolution in technology. This is not merely an opinion: systematic reviews and studies have consistently unearthed such bias.

A report by the World Economic Forum says that chronic underinvestment and bias have left women consistently underserved by healthcare systems, creating both a public health issue and a trillion-dollar blind spot. The report estimates that closing the women’s health gap could add at least $1 trillion in global economic output annually by 2040.

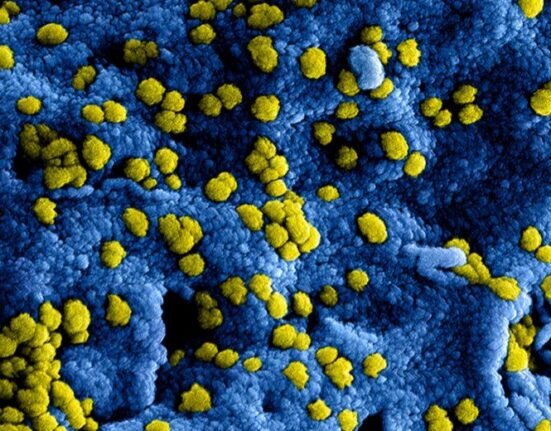

A systematic review of bias in clinical large language models (LLMs) found pervasive biases related to race/ethnicity and gender, manifesting as differential treatment recommendations that negatively impact marginalized patients.

Misdiagnosis and underrepresentation

It is telling when women-focused diseases are routinely dismissed. There is a failure to recognise that women’s distinct biology deserves in-depth review and focus. Social chat forums are replete with stories of how endometriosis, PCOD and pelvic pain are routinely dismissed by physicians and gynaecologists as ‘it happens and is routine in women’. Behind every statistic is a personal struggle. A woman with heart attack symptoms is 50% more likely than a man to be misdiagnosed and sent home. Another may endure nearly a decade of pain before being diagnosed with endometriosis.

Additionally, women’s pain reports are sometimes underestimated or labeled as psychosomatic in AI-generated outputs. Research on humans has historically been predisposed towards men’s physiology, with most clinical research skewed towards male participants.

Artificial intelligence came as a ray of hope for delivering more precise, equitable care in women’s health. But it seems our optimism was presumptuous.

Troubling pattern repetition

Gender bias was evident in AI models such as OpenAI’s GPT-4, Meta’s Llama 3 and more, where men’s symptoms were described as more severe than women’s despite similar clinical needs. There were even suggestions that women could treat some of the symptoms at home. The same report found that misdiagnosis was common for both ethnic minorities and women. The research reveals a troubling pattern of gender-based discrimination, with female patients experiencing approximately 7% more errors in treatment recommendations than male patients.

Similarly, research by the London School of Economics found that Google’s Gemma model, used by more than half the local authorities in the UK to support social workers, downplayed women’s physical and mental issues compared with men’s when used to generate and summarise case notes.

Research also found that the way people communicated their symptoms became a basis for disregarding or underplaying them. Typos, informal language and even difficulty in handling technology all played a role in the LLMs’ bias.

These findings underline the urgent need for bias auditing, representative data collection, transparency, and ethical oversight in AI healthcare tools to prevent harm and ensure equitable clinical outcomes for all patient groups.